Feihu Zhang, Benjamin W. Wah


Abstract

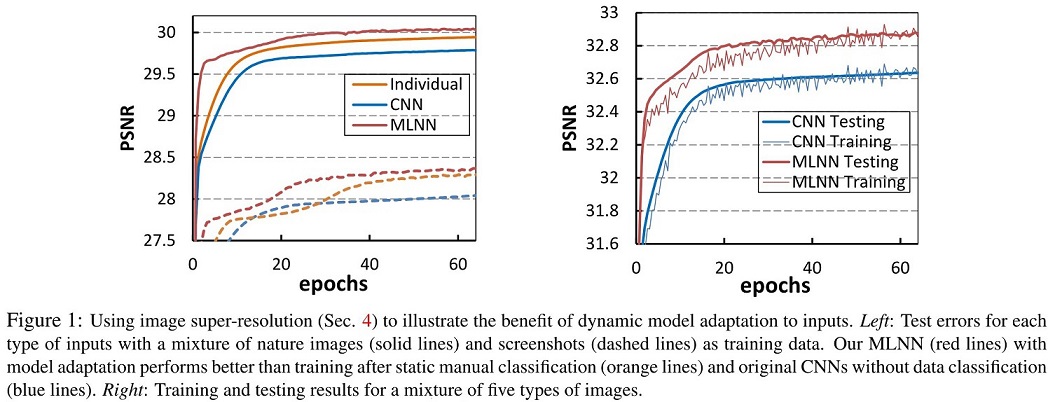

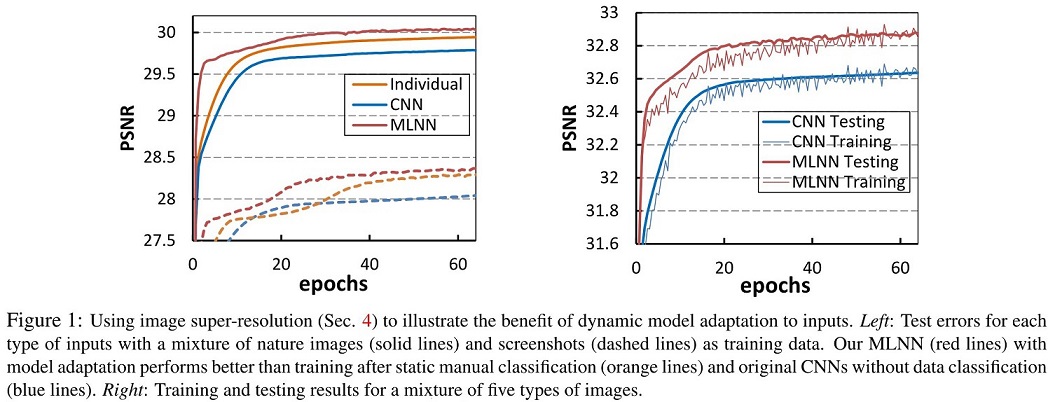

Training and test data in vision applications vary widely in type, style, and radiometric, exposure, and texture conditions. However, learning in traditional neural networks (NNs) seeks a single model with fixed parameters that optimizes average behavior over all inputs, without considering data-specific properties. In this paper, we develop a meta-level NN (MLNN) model that learns meta-knowledge in order to adapt its weights to different inputs. MLNN consists of two parts: the dynamic supplementary NN (SNN), which learns meta-information on each type of input, and the fixed base-level NN (BLNN), which incorporates the meta-information from SNN into its weights so that it generalizes to each type of input. We verify our approach using over ten network architectures under various application scenarios and loss functions. In low-level vision applications (image super-resolution and denoising), MLNN improves PSNR by 0.1 to 0.4 dB, whereas for high-level image classification, MLNN improves accuracy by 0.4% on CIFAR-10 and 2.1% on ImageNet when compared to convolutional NNs (CNNs). Improvements become more pronounced as the scale or diversity of the data increases.
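The two-part structure described above can be illustrated with a toy sketch. The abstract does not specify how the SNN's meta-information enters the BLNN weights, so everything here is an assumption made for illustration: the function names `snn`, `blnn`, and `mlnn`, the single linear layer, and the multiplicative per-unit gain `1 + meta` are all hypothetical and are not the authors' method.

```python
import math
import random


def snn(x, V):
    """Hypothetical supplementary net: maps an input to a meta vector.

    Produces one value in (-1, 1) per output unit of the base layer.
    """
    return [math.tanh(sum(v * xi for v, xi in zip(row, x))) for row in V]


def blnn(x, W, meta):
    """Hypothetical base net: one linear layer with input-dependent weights.

    ASSUMPTION: each row of the learned weights W is rescaled by (1 + meta).
    The paper's actual combination rule is not given in the abstract.
    """
    out = []
    for row, m in zip(W, meta):
        w_eff = [w * (1.0 + m) for w in row]  # effective weights for this input
        out.append(sum(w * xi for w, xi in zip(w_eff, x)))
    return out


def mlnn(x, W, V):
    """Meta-level forward pass: the SNN output adapts the BLNN weights."""
    return blnn(x, W, snn(x, V))


rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]  # base weights
V = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]  # SNN weights

x_a = [1.0, 0.5, -0.2]
x_b = [-0.3, 0.8, 0.1]
y_a = mlnn(x_a, W, V)
y_b = mlnn(x_b, W, V)
```

In this sketch, `W` and `V` stay fixed after training, yet the effective weights `w_eff` differ between `x_a` and `x_b` because `snn` returns a different meta vector for each input. If the SNN outputs zero, the model reduces to an ordinary fixed-weight layer.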

Downloads


"Supplementary Meta-Learning: Towards a Dynamic Model for Deep Neural Networks." Feihu Zhang, Benjamin W. Wah. For ICCV 2017.